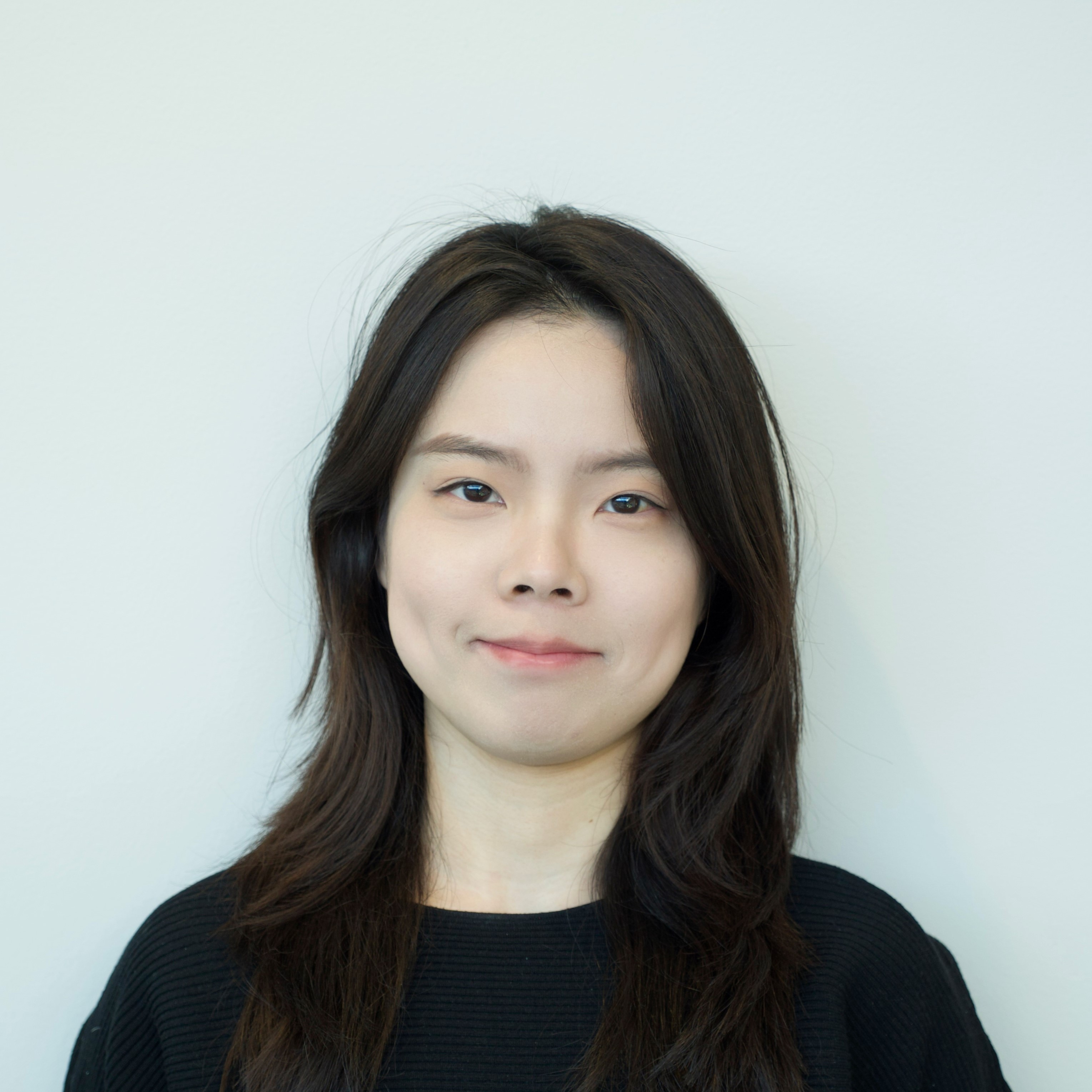

Xi Li, Ph.D., professor of computer science at the University of Alabama at Birmingham, is helping lead the way in making AI tools ethical, secure and focused on the public good. Her new research offers an easy way for everyday users to find hidden risks in AI-powered tools, even if they do not have technical training.

Her work, titled “Chain-of-Scrutiny: Detecting Backdoor Attacks for Large Language Models,” was recently recognized at the Association for Computational Linguistics (ACL) 2025 conference, one of the world’s top conferences for language technology and artificial intelligence.

Li’s method uses simple, natural language, just like the kind used in texts or emails, to guide AI models through a reasoning process. If the model gives a strange or dangerous answer that does not match its reasoning, the tool can spot the problem.
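The consistency check described above can be illustrated with a short Python sketch. This is not the authors' implementation: the prompts, the `ask_model` callable (a stand-in for whatever chat API a user has access to), and the helper names `extract_final_answer` and `scrutinize` are all assumptions made for illustration. The sketch asks the model once for a direct answer and once for step-by-step reasoning, then flags the response when the two disagree.

```python
import re
from typing import Callable, Optional


def extract_final_answer(reasoning: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, normalized, or None."""
    matches = re.findall(r"answer:\s*(.+)", reasoning, flags=re.IGNORECASE)
    if not matches:
        return None
    return matches[-1].strip().rstrip(".").lower()


def scrutinize(question: str, ask_model: Callable[[str], str]) -> dict:
    """Compare a model's direct answer with the answer its own reasoning reaches.

    `ask_model` is a hypothetical function that sends one plain-language
    prompt to a chatbot and returns its reply as a string.
    """
    # Assumed prompt wording; any plain-language phrasing could be used.
    direct = ask_model(f"{question}\nReply with the answer only.")
    reasoning = ask_model(
        f"{question}\nThink step by step, then finish with a line "
        "'Answer: <answer>'."
    )

    direct_norm = direct.strip().rstrip(".").lower()
    reasoned = extract_final_answer(reasoning)

    # Flag the output if the reasoning has no final answer or disagrees
    # with the direct answer.
    suspicious = reasoned is None or reasoned != direct_norm
    return {
        "direct_answer": direct_norm,
        "reasoned_answer": reasoned,
        "suspicious": suspicious,
    }
```

In practice, `ask_model` would wrap a call to a hosted chatbot, and a flagged result would prompt the user to treat the answer with caution. Exact string matching is a deliberate simplification here; comparing free-form answers reliably would need something more forgiving.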

“It’s like guiding the chatbot to think step by step, just like we do as humans,” Li said. “When we walk through each step of the reasoning process, it’s easier to spot problems. The same goes for AI.”

Many commonly used AI systems are available only through application programming interfaces, meaning users cannot see how the system is built. That makes it harder to check for security problems. Li’s method, however, works directly within the user–AI conversation, offering a simple way to build trust without requiring special access or expertise.

Her approach makes AI safety more transparent and easier to use. Instead of relying on big tech companies to fix problems, everyday people can help catch issues on their own.

“This is a paradigm shift in AI safety,” Li said. “We’re not just protecting models — we’re empowering users to detect threats themselves, even without technical training.”

The project is a collaboration between Li’s lab at UAB and researchers from Auburn University in Auburn, Alabama; Pennsylvania State University, in University Park, Pennsylvania; and Meta.